SAP case studies

Voice to Insight & Analytics Catalog

Context:

Over 16 months as a UX Design Intern, I worked on two teams: the Platform team (May 2019 - Dec 2019) and the Mobile team (Jan 2020 - Aug 2020).

I worked on SAP Analytics Cloud, which provides end-to-end analytics for businesses, helping them make data-driven decisions through visual dashboards. The product combines BI, planning, predictive and augmented analytics, and machine learning capabilities in the cloud.

Here are two projects I worked on during my time there:

project 1

Voice to Insight

tldr, what's this project about?

Helping businesses gain AI insights on their data through voice search

🎯 Business Goal:

Let customers quickly generate charts from their datasets with a voice query

Role:

Sole designer responsible for delivering the strategy and final design for iOS

Team:

1 product manager, ~3 developers, 1 design manager

Timeline + Scope

4 months

Success Metrics:

Delivering the top feature request

This was the v1 scope of one of the top customer requests for the quarter.

Overview

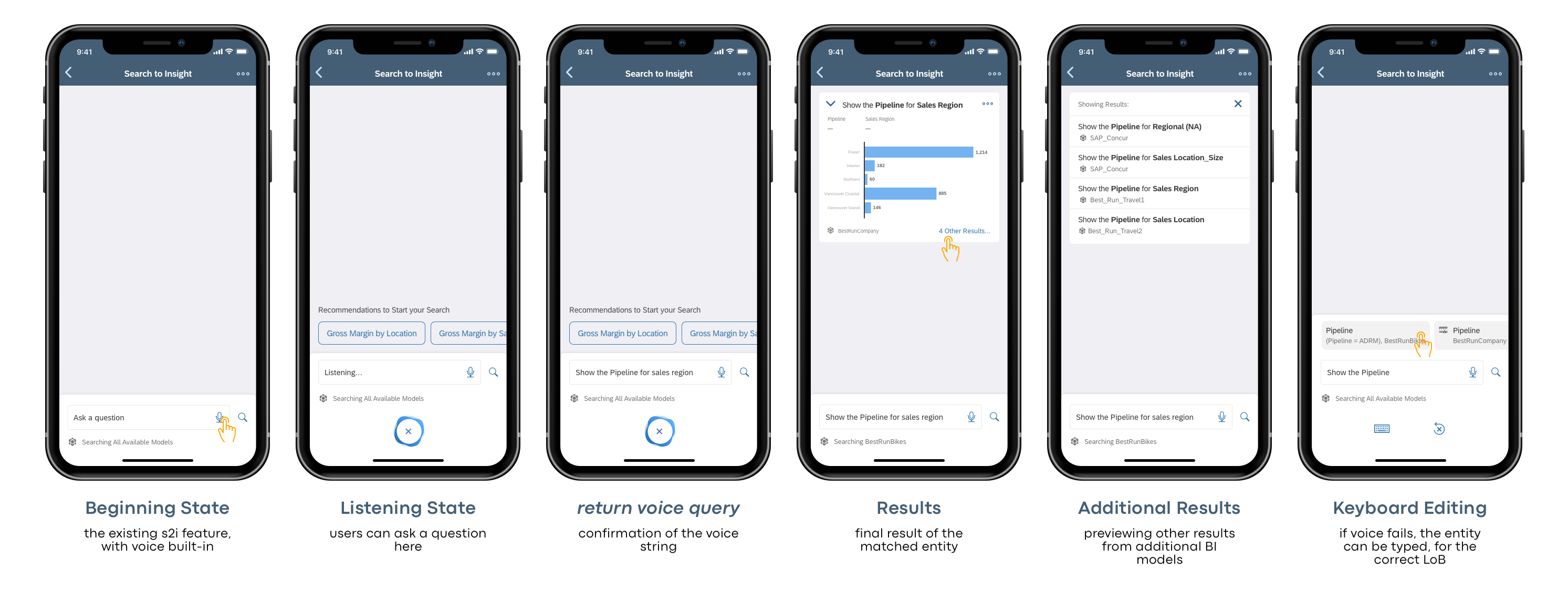

I enhanced our mobile app by integrating a voice-activated feature to provide business insights, building on the existing "Search to Insight" (S2I) functionality from the desktop. S2I uses natural language processing to help businesses gain insights from questions like "What were the sales for Q3 2021?", generating a chart in response. The business goal was to replicate this experience on mobile, but with voice commands.

The project presented a unique challenge: determining the precise dataset a user wants, given the complexity and variety of business models. On the desktop, users can type and directly select the appropriate model from suggestions. On mobile, however, typing a long, complex question is cumbersome.

Problem area:

We need to reduce the friction of typing a long question on mobile by offering voice search instead, so users can ask questions more quickly

To address this, I helped design the Voice to Insight (V2I) feature, which lets users speak their queries directly into the mobile app. It was integrated with the existing text-based S2I feature on mobile, adding voice input.

Predicting the right model:

In most cases, if organizations have set up their models correctly, we return the most popular entity result once you speak your query. (Fig 1: "Beginning state" to "Return voice query" above)

Building in a fallback

We also built in a fallback in case voice fails or you don't get the right results. Users can select a different model (Fig 1: Additional results) or return to keyboard typing (Fig 1: Keyboard editing).

You can learn more about this in the video above and in SAP's blog post.

project 2

Analytics Catalog

tldr, what's this project about?

Helping businesses discover their business content

🎯 Business Goal:

Allow admins to centralize content for easy access, based on content targeting

Role:

Delivered the initial card and metadata container UI, along with future vision planning

Team:

3 designers, 5+ developers, 1 product manager

Timeline + Scope

8 months, delivered in Feb 2020

Overview

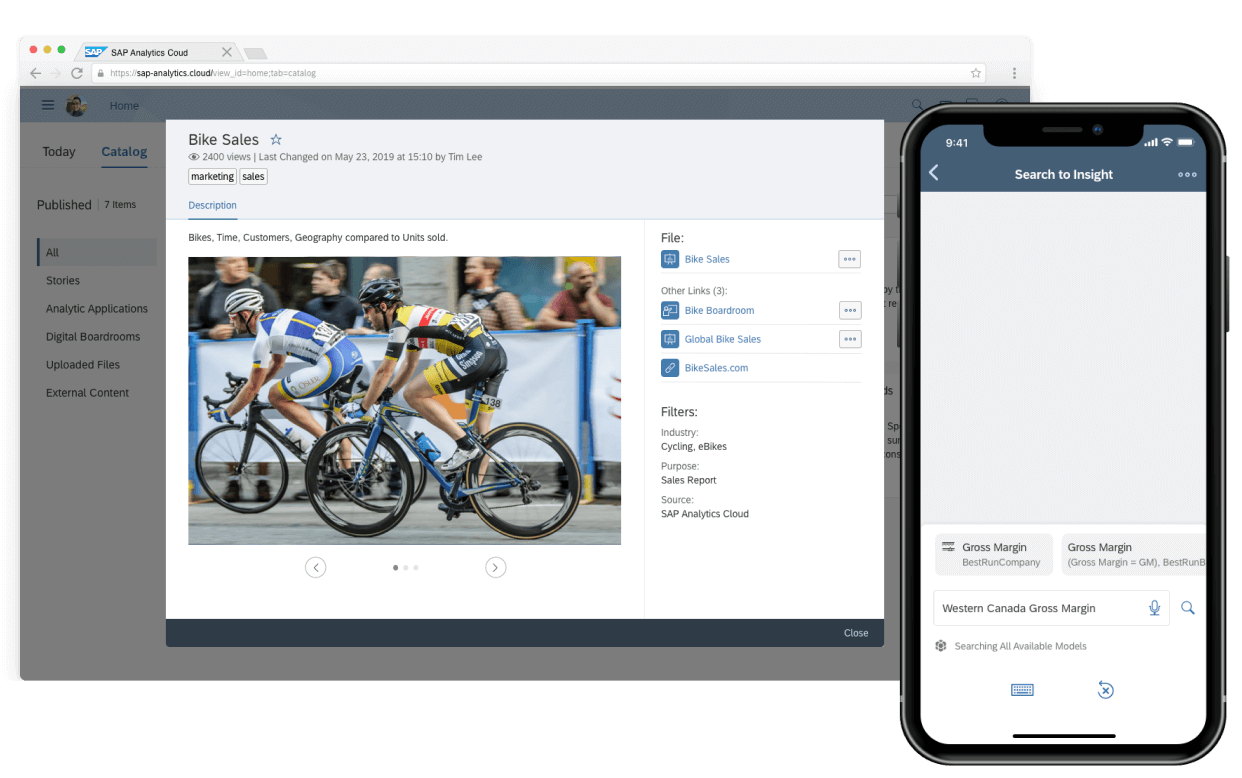

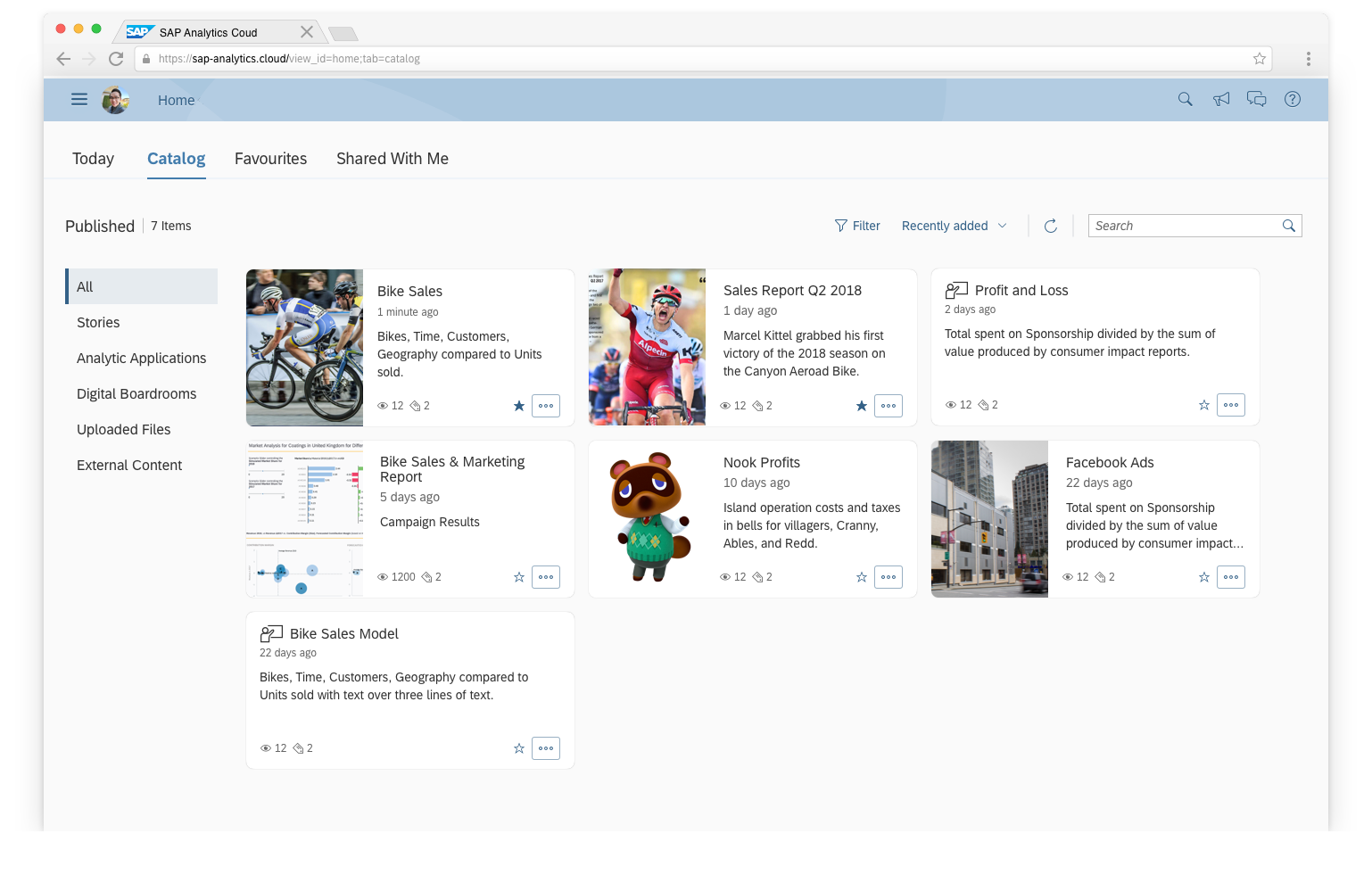

I also worked on Analytics Catalog, helping users discover content in a central area.

As an example, think about your Documents folder, which probably holds many files, and imagine trying to find one specific file tucked away in an obscure folder. It might not be so easy.

Problem area:

Users are having a hard time finding dashboards, datasets, and content in their file repository

How can we help admins centralize this information for their employees?

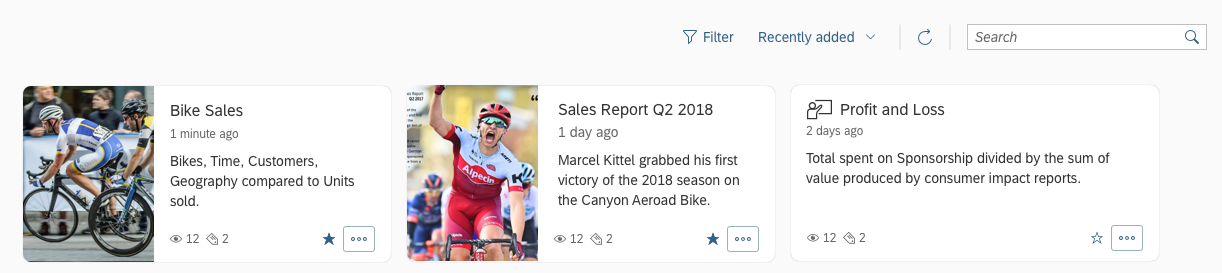

The solution was a guided browsing experience with enhanced metadata and custom filters. With a central place to discover content, there’s no need to show folders; everything appears in a single view, with content curated for each user based on their permissions.

Card design

I worked on the card design, which helps users get the right information about the context of an item. Actions are also available on the card through the "3-dot" button.

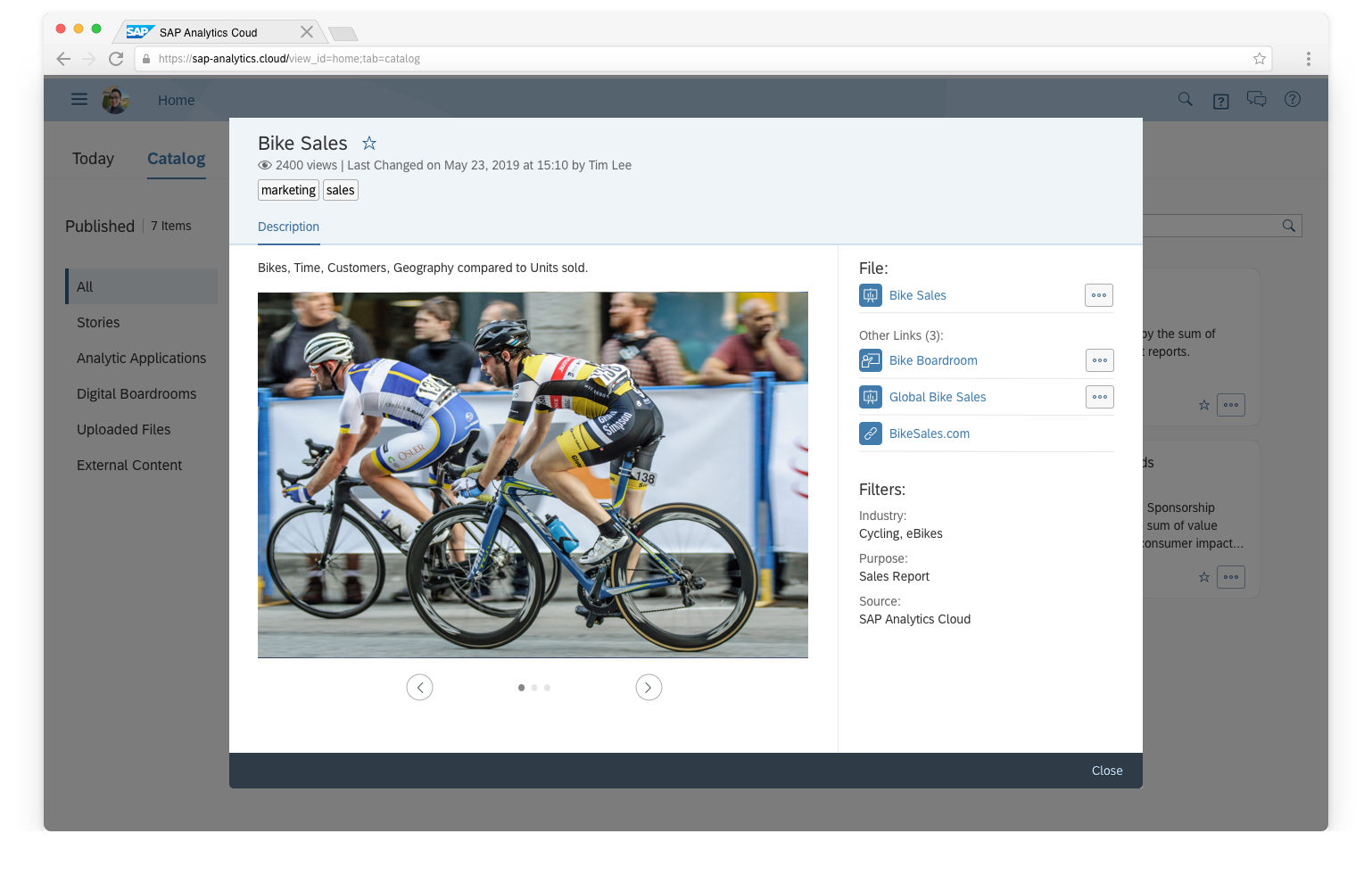

Metadata container

The metadata container appears upon clicking the card. It builds on the item details and gives additional context about the object itself through Other Links. The container is user-customizable, so it can show any additional metadata a user may need.